In this blog, you will learn how to load data from an Azure storage account into Snowflake automatically using Snowpipe.

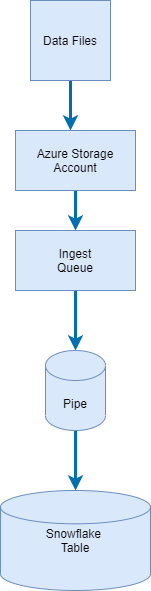

Process Flow

1. Prerequisite

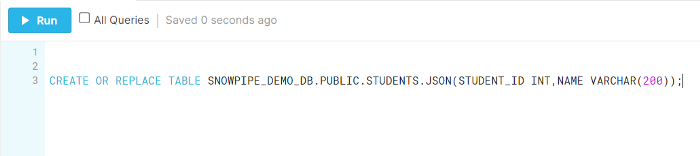

Assume we have a table in our Snowflake database, and we want to copy data from Azure Blob Storage into that table as soon as new files are uploaded to Blob Storage.

2. Set up Azure services and Snowflake to build a data pipeline that auto-ingests files from Azure Blob Storage into a Snowflake table.
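On the Snowflake side, an Azure auto-ingest pipeline of this kind typically needs four objects: a storage integration, a notification integration for the storage queue that Event Grid writes to, an external stage, and a pipe with `AUTO_INGEST = TRUE`. The Python sketch below only assembles that SQL as strings so you can review it before running it in a worksheet. It is a hypothetical illustration, not the exact statements used later in this walkthrough: the `PipelineConfig` class, the object names (`AZURE_STORAGE_INT`, `AZURE_NOTIFICATION_INT`, `AZURE_STAGE`, `AZURE_PIPE`), the tenant ID and queue name placeholders, the CSV file format, and the assumption that the queue lives in the same storage account are all assumptions.

```python
"""Hypothetical sketch: generate the Snowflake SQL for an Azure auto-ingest
pipeline. Object names, the CSV format, and the queue location are assumptions."""

from dataclasses import dataclass


@dataclass
class PipelineConfig:
    storage_account: str  # e.g. "testportal1"
    container: str        # e.g. "snowpipe-demo-container"
    tenant_id: str        # Azure tenant (directory) ID, placeholder
    queue_name: str       # storage queue fed by Event Grid (assumed same account)
    table: str            # existing target table from the prerequisite step
    storage_integration: str = "AZURE_STORAGE_INT"
    notification_integration: str = "AZURE_NOTIFICATION_INT"
    stage: str = "AZURE_STAGE"
    pipe: str = "AZURE_PIPE"


def build_statements(cfg: PipelineConfig) -> list[str]:
    """Return the CREATE statements in the order they would be executed."""
    location = f"azure://{cfg.storage_account}.blob.core.windows.net/{cfg.container}/"
    queue_uri = f"https://{cfg.storage_account}.queue.core.windows.net/{cfg.queue_name}"
    return [
        # 1. Lets Snowflake read the container without storing account keys.
        f"CREATE STORAGE INTEGRATION {cfg.storage_integration}\n"
        "  TYPE = EXTERNAL_STAGE\n"
        "  STORAGE_PROVIDER = 'AZURE'\n"
        "  ENABLED = TRUE\n"
        f"  AZURE_TENANT_ID = '{cfg.tenant_id}'\n"
        f"  STORAGE_ALLOWED_LOCATIONS = ('{location}');",
        # 2. Lets Snowflake read "new blob" event messages from the storage queue.
        f"CREATE NOTIFICATION INTEGRATION {cfg.notification_integration}\n"
        "  ENABLED = TRUE\n"
        "  TYPE = QUEUE\n"
        "  NOTIFICATION_PROVIDER = AZURE_STORAGE_QUEUE\n"
        f"  AZURE_STORAGE_QUEUE_PRIMARY_URI = '{queue_uri}'\n"
        f"  AZURE_TENANT_ID = '{cfg.tenant_id}';",
        # 3. External stage pointing at the container.
        f"CREATE STAGE {cfg.stage}\n"
        f"  URL = '{location}'\n"
        f"  STORAGE_INTEGRATION = {cfg.storage_integration};",
        # 4. Pipe that runs COPY INTO whenever a queue notification arrives.
        f"CREATE PIPE {cfg.pipe}\n"
        "  AUTO_INGEST = TRUE\n"
        f"  INTEGRATION = '{cfg.notification_integration}'\n"
        "  AS\n"
        f"  COPY INTO {cfg.table}\n"
        f"  FROM @{cfg.stage}\n"
        "  FILE_FORMAT = (TYPE = 'CSV');",
    ]


if __name__ == "__main__":
    cfg = PipelineConfig(
        storage_account="testportal1",
        container="snowpipe-demo-container",
        tenant_id="<tenant-id>",
        queue_name="snowpipe-demo-queue",
        table="MY_TABLE",
    )
    for statement in build_statements(cfg):
        print(statement, end="\n\n")
```

Keeping every name in one config object means the stage URL and the storage integration's allowed location are derived from the same values and cannot drift apart.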

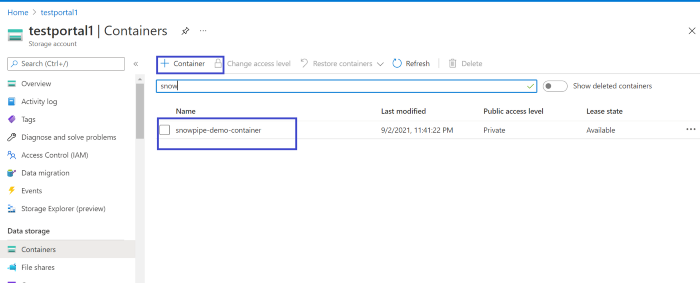

Create a storage account under your resource group. Example: “testportal1”
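Azure rejects storage account names that break its naming rules: 3 to 24 characters, lowercase letters and digits only, and globally unique across Azure. The sketch below checks the first two rules locally before you submit the form; uniqueness can only be confirmed by Azure itself. The helper names `is_valid_storage_account_name` and `blob_endpoint` are hypothetical, and the endpoint format assumes the public Azure cloud.

```python
import re

# Azure storage account names must be 3-24 characters long and use only
# lowercase letters and digits. Global uniqueness can only be checked by Azure.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Return True if `name` follows Azure's storage-account naming rules."""
    return _ACCOUNT_NAME.fullmatch(name) is not None


def blob_endpoint(name: str) -> str:
    """Default Blob service endpoint for a storage account (public Azure cloud)."""
    return f"https://{name}.blob.core.windows.net"


if __name__ == "__main__":
    # A trailing space, uppercase letters, hyphens, or a too-short name all fail.
    for candidate in ["testportal1", "testportal1 ", "Test-Portal", "ab"]:
        print(repr(candidate), is_valid_storage_account_name(candidate))
    print(blob_endpoint("testportal1"))
```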

2.1 Create a container (Azure)

Example: “snowpipe-demo-container”
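Container names follow their own rules: 3 to 63 characters, lowercase letters, digits, and hyphens only, starting and ending with a letter or digit, with no consecutive hyphens. Later, Snowflake refers to the container through an `azure://<account>.blob.core.windows.net/<container>/` URL. The sketch below validates a container name and builds that URL form; `is_valid_container_name` and `snowflake_stage_url` are hypothetical helpers, and the special `$root` container is deliberately ignored.

```python
import re


def is_valid_container_name(name: str) -> bool:
    """Check Azure Blob container naming rules (ignores the special $root container)."""
    if not 3 <= len(name) <= 63:
        return False
    # Only lowercase letters, digits, and hyphens are allowed.
    if re.fullmatch(r"[a-z0-9-]+", name) is None:
        return False
    # Must start and end with a letter or digit; no consecutive hyphens.
    return name[0] != "-" and name[-1] != "-" and "--" not in name


def snowflake_stage_url(account: str, container: str, path: str = "") -> str:
    """Build the azure:// URL form used by a Snowflake external stage."""
    prefix = f"azure://{account}.blob.core.windows.net/{container}/"
    cleaned = path.strip("/")
    # Append an optional folder path, always ending with a single slash.
    return prefix + (cleaned + "/" if cleaned else "")


if __name__ == "__main__":
    print(is_valid_container_name("snowpipe-demo-container"))
    print(snowflake_stage_url("testportal1", "snowpipe-demo-container"))
```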